(School of Computer Science and Technology, Soochow University, Suzhou 215006, China)

DOI: 10.6043/j.issn.0438-0479.201908042


Relying on large-scale parallel corpora, neural machine translation has achieved great success on some language pairs. For the vast majority of language pairs, however, obtaining high-quality parallel corpora remains one of the main difficulties in machine translation research. One feasible solution is unsupervised neural machine translation (UNMT), which can be trained on just two monolingual corpora that are not parallel to each other and still obtain reasonable translation results. Inspired by the good results of multi-task learning in supervised neural machine translation, this paper explores the application of UNMT to multilingual, multi-task learning. Our experiments use monolingual corpora in three languages that are not parallel to one another and set up bidirectional translation tasks between every pair of languages. Experimental results show that, compared with single-task UNMT, the method improves the bilingual evaluation understudy (BLEU) score by up to 2 to 3 points on some language pairs.

Introduction

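Machine translation, which studies how computers can automatically translate between different languages, is an important research area in natural language processing [1]. In recent years, neural machine translation (NMT) [2] has brought significant improvements on many translation tasks [3] and has attracted wide attention and adoption. NMT systems based on the sequence-to-sequence model [4] encode the input sentence into a sequence of vectors and then use a decoder with an attention mechanism to generate the target-side words one by one. In 2017, Vaswani et al. [5] proposed a new architecture, the Transformer, which abandons the traditional recurrent neural network (RNN) and convolutional neural network (CNN) and relies entirely on self-attention, pushing machine translation performance to a new peak.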

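However, NMT has a drawback that cannot be ignored: it requires large-scale parallel corpora, and high-quality parallel data are often hard to obtain, which has become one of the main obstacles in machine translation research. When parallel data are insufficient, translation quality inevitably drops sharply, and for language pairs with scarce parallel data it still leaves much room for improvement. Training machine translation models on monolingual data, which is far easier to obtain, has therefore become a new research direction and has sparked a new wave of interest.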

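In 2017, Artetxe et al. [6] learned cross-lingual word embeddings with almost no bilingual data and obtained a reasonable word-by-word translation model. Building on this work, Artetxe et al. [7] then generated pseudo-parallel corpora through denoising and back-translation and trained on them, with good experimental results. In 2018, Lample et al. [8], combining their earlier work [9], summarized unsupervised NMT (UNMT) into three principles: 1) initialize a good word-by-word model; 2) using the given monolingual corpora, train language models for the source and target languages with denoising autoencoders; 3) convert the unsupervised problem into a supervised task through iterative back-translation. In back-translation, the current source→target model first translates a source sentence x into a target-language sentence y; the target→source model then translates y back into a source-language sentence x*; (y, x) is treated as a parallel sentence pair and the loss between x and x* is used to optimize the target→source model. The source→target model is optimized in the same way in the opposite direction.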

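Inspired by multi-task learning for supervised NMT [10], this work studies multilingual NMT in the unsupervised setting. Although different languages differ greatly in vocabulary, they share many similarities in grammar and linguistic structure. We therefore train multilingual UNMT with a multi-task learning strategy, aiming to learn useful semantic and structural information from different target languages.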

1 Architecture and Model

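We use the Transformer as the base architecture and build one encoder and one decoder for each language, sharing the parameters of some of their layers. Following the three UNMT principles, we set up training tasks among English (en), French (fr) and German (de) simultaneously (en-fr, en-de and de-fr) and train a UNMT model. For example, when training the en→fr and en→de tasks, since French and German have similar linguistic structures, the model can jointly learn useful semantic and structural information from the different target languages.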

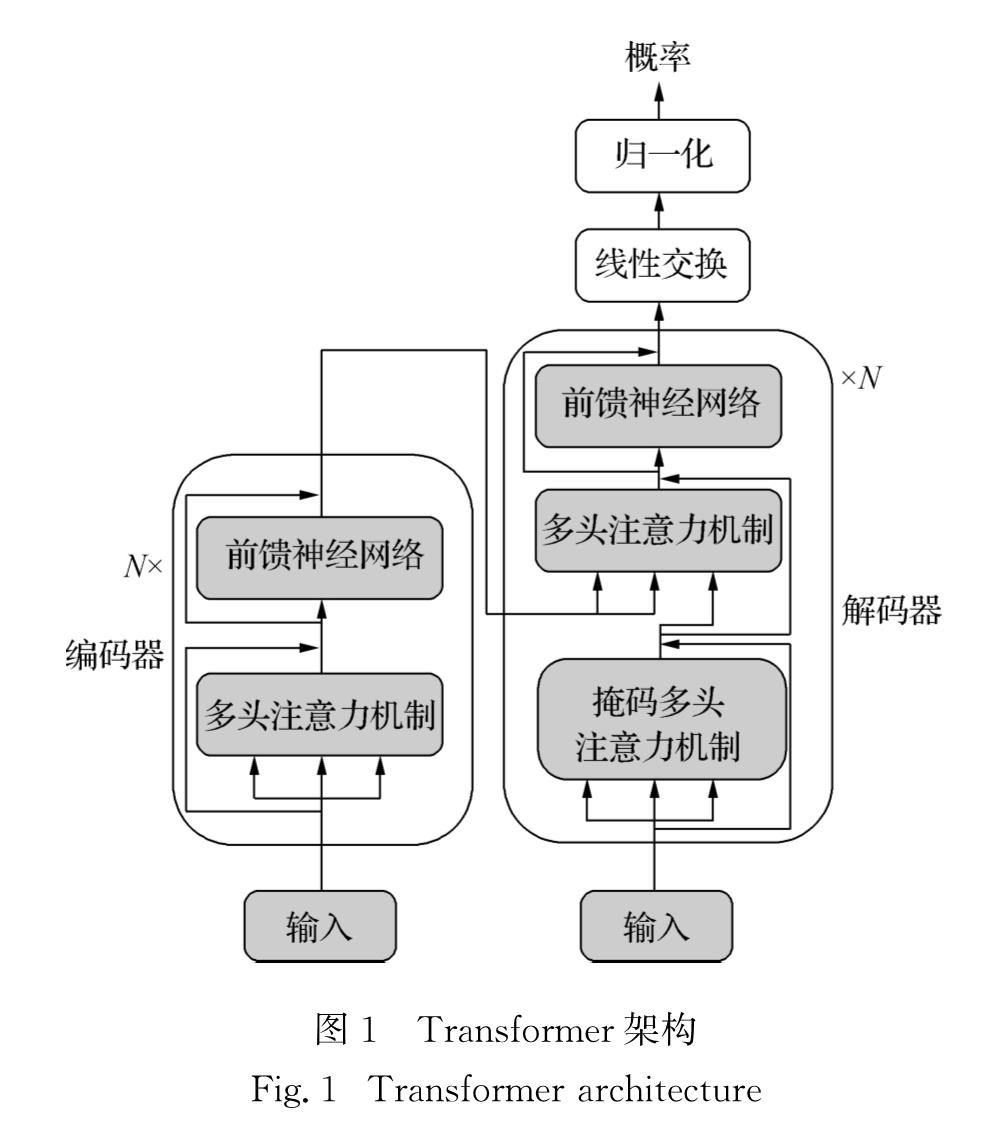

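1.1 Transformer Architecture

The main feature of the Transformer is that it relies on neither RNNs nor CNNs and implements end-to-end NMT with self-attention alone. Self-attention computes attention between each word of a sentence and every other word of the same sentence; its purpose is to learn the dependencies within the sentence and capture its internal structure.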
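To make the self-attention computation concrete, here is a minimal single-head sketch in plain Python. It assumes identity query/key/value projections and made-up 2-D word vectors, so it illustrates scaled dot-product attention rather than reproducing a full multi-head Transformer layer.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(xs):
    """Single-head scaled dot-product self-attention over one sentence.

    xs: one vector per word. To keep the sketch short, queries, keys and values
    are the input vectors themselves; a real Transformer first applies learned
    projections W_Q, W_K, W_V and uses several heads.
    Returns (outputs, weights); weights[i][j] is how strongly word i attends to word j.
    """
    d = len(xs[0])
    outputs, weights = [], []
    for q in xs:
        # Compare this word with every word of the sentence, itself included.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in xs]
        w = softmax(scores)
        weights.append(w)
        # New representation: attention-weighted sum of the value vectors.
        outputs.append([sum(w[j] * xs[j][t] for j in range(len(xs))) for t in range(d)])
    return outputs, weights

sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy word vectors (hypothetical)
outputs, attn = self_attention(sentence)
```

Every row of `attn` is a probability distribution over the words of the sentence, so each output vector mixes information from the whole sentence, which is how self-attention captures intra-sentence dependencies.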

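The structure of the Transformer is shown in Fig. 1. Both the encoder and the decoder are multi-layer networks consisting of N identical layers. In the encoder, each layer contains two sublayers: a self-attention layer and a feed-forward network layer. In the decoder, each layer contains three sublayers: in addition to a masked self-attention layer and a feed-forward layer, there is a multi-head attention sublayer over the encoder output between them. Every sublayer is wrapped in a residual connection [11], which can be written as Eq. (1):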

$$h_l = h_{l-1} + f_s^{l}(h_{l-1}) \tag{1}$$

where $h_l$ denotes the output of the $l$-th sublayer and $f_s^{l}$ denotes the function computed by that sublayer.
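Eq. (1) can be sketched directly on plain lists. The two sublayers below are hypothetical stand-ins for the attention and feed-forward blocks; the original Transformer additionally applies layer normalization after each addition, which this sketch omits.

```python
def residual(sublayer, h_prev):
    """Eq. (1): h_l = h_{l-1} + f_s^l(h_{l-1}), applied element-wise."""
    return [h + f for h, f in zip(h_prev, sublayer(h_prev))]

def run_sublayers(sublayers, h0):
    """Apply a stack of sublayers, each wrapped in a residual connection."""
    h = h0
    for f in sublayers:
        h = residual(f, h)
    return h

# Hypothetical toy sublayers standing in for attention / feed-forward blocks.
def halve(h):
    return [0.5 * x for x in h]

def add_one(h):
    return [x + 1.0 for x in h]

def zeros(h):
    return [0.0] * len(h)

print(run_sublayers([halve, add_one], [1.0, 2.0]))  # [4.0, 7.0]
print(residual(zeros, [1.0, 2.0]))                  # [1.0, 2.0]
```

The second call shows that a sublayer whose output is zero leaves its input unchanged, so each layer starts close to the identity map; this is one commonly cited reason residual connections make deep stacks easier to train.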

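1.2 Bilingual Single-Task UNMT Model

In the training stage, the bilingual single-task UNMT model uses only the Transformer combined with the three UNMT principles to train a single translation task, i.e., one of en→fr, en→de and de→fr.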

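Let $S$ and $T$ denote the sets of source-language and target-language sentences, and $s\in S$ and $t\in T$ individual sentences. $P_s$ and $P_t$ denote the language models trained on the source and target monolingual data, and $P_{s\to t}$ and $P_{t\to s}$ denote the probabilities predicted by the source-to-target and target-to-source translation models. UNMT consists of the following three steps.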

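1) Initialization. There are broadly two ways to initialize the model. The first trains word embeddings for each language separately with word2vec [12-13] and then learns a transformation matrix that maps both sets of embeddings into the same latent space [6,14], which yields a reasonably accurate bilingual dictionary. The second splits words into subword units with byte-pair encoding (BPE) [15], which reduces the vocabulary size and avoids the "unknown word (UNK)" problem during translation [16]. Moreover, unlike the first method, the second learns embeddings jointly on the two monolingual corpora after mixing and shuffling them, so the source and target languages can share a single vocabulary.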
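The mapping step of the first initialization method can be illustrated with a deliberately tiny example. The sketch below assumes a small seed dictionary and 2-D embeddings, so the best orthogonal map restricted to rotations has a closed form; real systems such as [6] work in hundreds of dimensions, solve the orthogonal Procrustes problem with an SVD and iterate. All words and vectors here are made up.

```python
import math

def fit_rotation(src_vecs, tgt_vecs):
    """Least-squares 2-D rotation angle mapping src_vecs[i] onto tgt_vecs[i].

    For rotations R(theta), maximizing sum_i y_i . R x_i gives
    theta = atan2(sum(x1*y2 - x2*y1), sum(x1*y1 + x2*y2)).
    """
    a = sum(x[0] * y[0] + x[1] * y[1] for x, y in zip(src_vecs, tgt_vecs))
    b = sum(x[0] * y[1] - x[1] * y[0] for x, y in zip(src_vecs, tgt_vecs))
    return math.atan2(b, a)

def rotate(v, theta):
    c, s = math.cos(theta), math.sin(theta)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])

def cosine(u, v):
    return (u[0] * v[0] + u[1] * v[1]) / (math.hypot(*u) * math.hypot(*v))

def induce_dictionary(src_emb, tgt_emb, theta):
    """Translate each source word to the target word nearest (cosine) to its mapped vector."""
    lexicon = {}
    for word, vec in src_emb.items():
        mapped = rotate(vec, theta)
        lexicon[word] = max(tgt_emb, key=lambda t: cosine(mapped, tgt_emb[t]))
    return lexicon

# Hypothetical toy data: the French space is the English space rotated by 90 degrees.
en = {"cat": (1.0, 0.0), "dog": (0.9, 0.3), "car": (0.0, 1.0)}
fr = {"chat": (0.0, 1.0), "chien": (-0.3, 0.9), "voiture": (-1.0, 0.0)}
seed = [("cat", "chat"), ("car", "voiture")]

theta = fit_rotation([en[s] for s, _ in seed], [fr[t] for _, t in seed])
print(round(math.degrees(theta)))        # 90
print(induce_dictionary(en, fr, theta))  # {'cat': 'chat', 'dog': 'chien', 'car': 'voiture'}
```

"dog" is not in the seed dictionary, yet the learned map translates it correctly; with real embeddings the two spaces are only approximately isomorphic, so the induced dictionary is noisier.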

2) Language modeling. In the bilingual single-task UNMT model, the denoising autoencoder minimizes the loss function

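$$L_{\mathrm{lm}} = \mathbb{E}_{x\in S}\left[-\log P_{s\to s}(x \mid C(x))\right] + \mathbb{E}_{y\in T}\left[-\log P_{t\to t}(y \mid C(y))\right] \tag{2}$$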

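where $\mathbb{E}_{x\in S}$ denotes the expectation of the cross-entropy loss over sentences $x$ in $S$, and $C(x)$ denotes sentence $x$ after noise has been added by swapping the positions of some words or deleting some words. Training the language model thus amounts to using the noised sentence $C(x)$ as the source-side input and the original sentence $x$ as the target-side output, i.e., training on $(C(x), x)$ as a parallel sentence pair.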
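The noise model $C(x)$ described above can be sketched as follows. The deletion probability `p_drop` and shuffle window `k` are illustrative assumptions, not values reported in this paper; the shuffle sorts word positions perturbed by uniform noise, which guarantees that no word moves more than `k` positions.

```python
import random

def add_noise(words, p_drop=0.1, k=3, rng=random):
    """Noise model C(x): random word deletion followed by a local shuffle.

    p_drop and k are illustrative defaults, not values reported in this paper.
    After deletion, no word moves more than k positions from its original place.
    """
    # Word deletion: drop each word with probability p_drop, but keep at least one word.
    kept = [w for w in words if rng.random() >= p_drop] or list(words[:1])
    # Local shuffle: sort positions perturbed by uniform noise drawn from [0, k + 1].
    keys = [i + rng.uniform(0, k + 1) for i in range(len(kept))]
    order = sorted(range(len(kept)), key=lambda i: keys[i])
    return [kept[i] for i in order]

def denoising_pair(sentence, rng=random):
    """Training pair (C(x), x) for the denoising autoencoder of Eq. (2)."""
    return add_noise(sentence, rng=rng), list(sentence)

rng = random.Random(0)
noisy, clean = denoising_pair("the cat sat on the mat".split(), rng=rng)
```

Keeping the corruption local matters: the autoencoder must learn to restore word order and missing words, but the noised sentence still carries enough of the original for the task to be learnable.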

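3) Back-translation. Back-translation trains on pseudo-parallel sentence pairs as if they were real parallel pairs. Its loss function is given in Eq. (3):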

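$$L_{\mathrm{back}} = \mathbb{E}_{y\in T}\left[-\log P_{s\to t}(y \mid u^{*}(y))\right] + \mathbb{E}_{x\in S}\left[-\log P_{t\to s}(x \mid u^{*}(x))\right] \tag{3}$$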

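where $u^{*}(y)$ denotes the source-language sentence obtained by translating the target-language sentence $y$ with the current target-to-source model, and conversely $u^{*}(x)$ denotes the target-language sentence obtained by translating the source-language sentence $x$ with the current source-to-target model. Back-translation treats both $(u^{*}(y), y)$ and $(u^{*}(x), x)$ as parallel sentence pairs, converting the unsupervised problem into a supervised one.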

Iterating steps 2) and 3) gives the complete training procedure of the bilingual single-task UNMT model.
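The data flow of one training iteration can be sketched as follows. The translators are hypothetical word-by-word lookups (mirroring the initial model of principle 1), and the gradient update on Eqs. (2) and (3) is omitted; in a real system each iteration rebuilds these pairs with the updated models.

```python
def word_by_word(lexicon):
    """Hypothetical stand-in for a translation model: word-by-word lookup."""
    return lambda sentence: [lexicon.get(w, w) for w in sentence]

def build_training_pairs(src_batch, tgt_batch, translate_s2t, translate_t2s, noise):
    """Collect the (input, output) pairs for one UNMT iteration (steps 2 and 3).

    translate_s2t / translate_t2s are the *current* models, used only for
    inference here; noise is the corruption C(.).
    """
    return {
        # Eq. (2): denoising, trains P_{s->s}(x | C(x)) and P_{t->t}(y | C(y)).
        "denoise_src": [(noise(x), x) for x in src_batch],
        "denoise_tgt": [(noise(y), y) for y in tgt_batch],
        # Eq. (3): back-translation. u*(y) comes from the t->s model and
        # trains s->t; u*(x) comes from the s->t model and trains t->s.
        "bt_s2t": [(translate_t2s(y), y) for y in tgt_batch],
        "bt_t2s": [(translate_s2t(x), x) for x in src_batch],
    }

en2fr = {"the": "le", "cat": "chat", "sleeps": "dort"}
fr2en = {v: k for k, v in en2fr.items()}
pairs = build_training_pairs(
    src_batch=[["the", "cat", "sleeps"]],
    tgt_batch=[["le", "chat"]],
    translate_s2t=word_by_word(en2fr),
    translate_t2s=word_by_word(fr2en),
    noise=lambda s: s[::-1],  # deterministic placeholder for C(.)
)
print(pairs["bt_s2t"])  # [(['the', 'cat'], ['le', 'chat'])]
```

Note that the target side of every back-translation pair is a genuine monolingual sentence; only the input is synthetic, which is why noisy early translations still provide a useful training signal.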

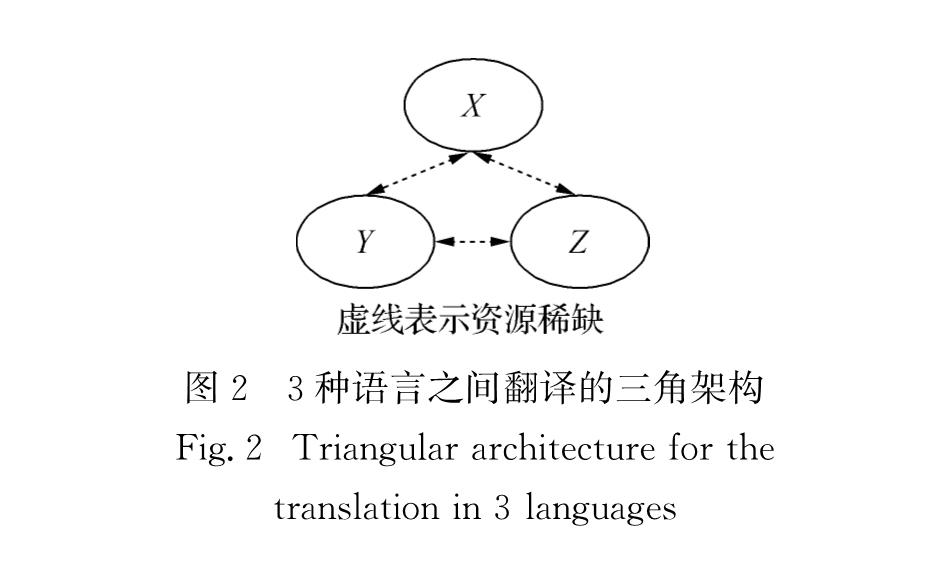

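1.3 Multilingual Multi-Task UNMT Model

The multilingual multi-task UNMT model is obtained by jointly training multiple tasks under the Transformer architecture with the three UNMT principles. Specifically, suppose we have monolingual corpora in three languages X, Y and Z that are not parallel to one another (Fig. 2). The multi-task UNMT model then has six training tasks: X→Y, Y→X, X→Z, Z→X, Y→Z and Z→Y. Inspired by Yang et al. [14], in order to preserve the semantic structure specific to each language while still learning useful structural information from the others, we build one encoder and one decoder for each language but share the parameters of some of their layers. The parameters θ are optimized as in Eq. (4):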

$$\theta^{*} = \arg\max_{\theta} \sum_{n=1}^{6} \frac{1}{M} \sum_{i=1}^{M} \log P\left(y_i^{n} \mid x_i^{n}; \theta\right) \tag{4}$$

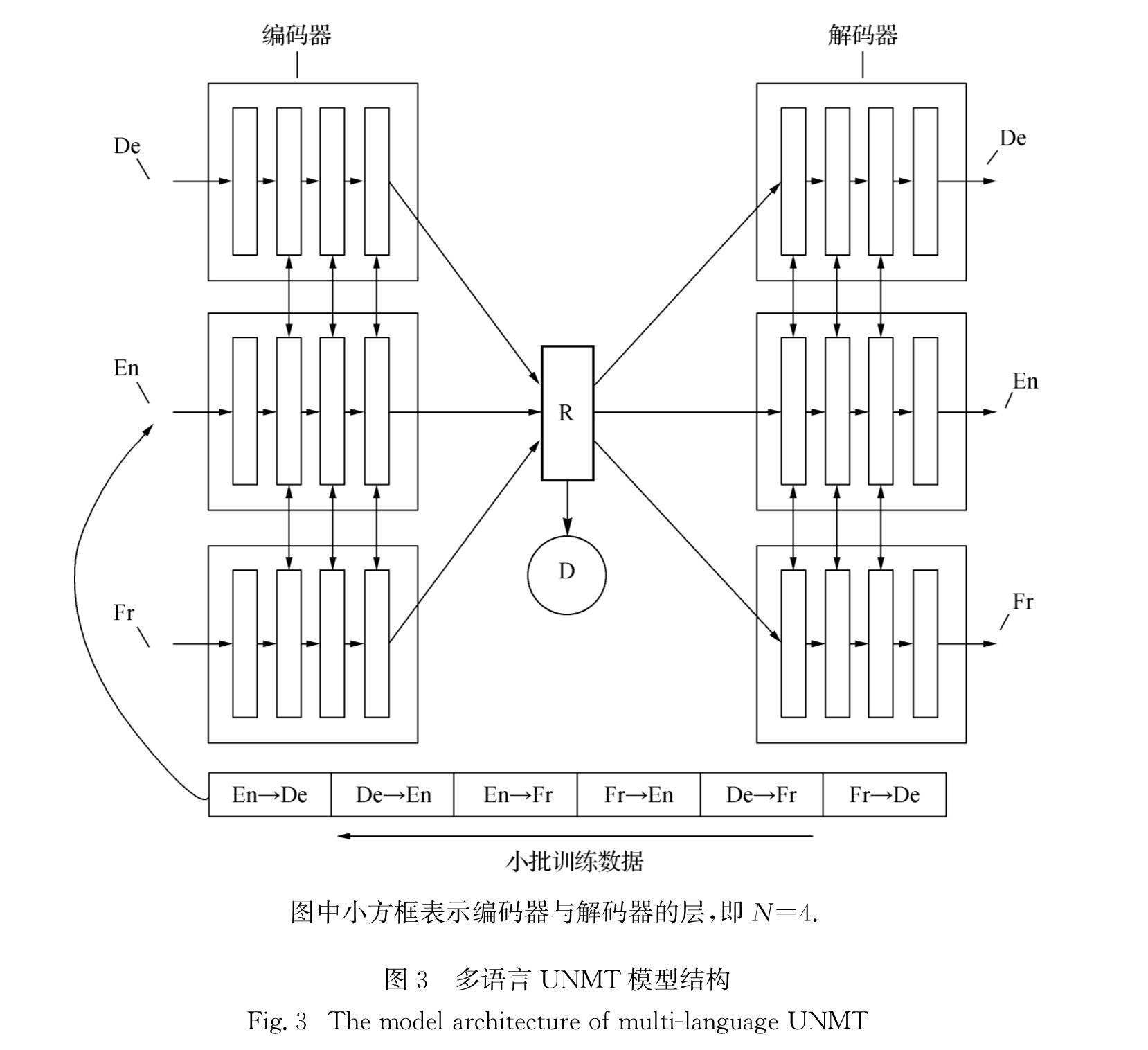

Small boxes in the figure denote encoder and decoder layers, i.e., N = 4.

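where $n = 1, 2, \ldots, 6$ indexes the translation tasks, $M$ is the number of sentence pairs, and $x$ and $y$ are the source- and target-language sentences of the current translation task. This parameter setting allows each language pair to learn useful information from the other languages.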
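A small sketch of the task set and of the quantity maximized in Eq. (4). The per-task log-probabilities are made-up numbers; in training they would come from the shared model. The function averages within each task, which coincides with the $1/M$ factor of Eq. (4) when every task has $M$ pairs.

```python
from itertools import permutations

LANGS = ("X", "Y", "Z")
# Every ordered pair of distinct languages is one task: 3 * 2 = 6 directions.
TASKS = list(permutations(LANGS, 2))

def multitask_objective(task_logprobs):
    """Value inside the arg max of Eq. (4) for fixed parameters theta.

    task_logprobs maps each task (src, tgt) to the values log P(y_i | x_i; theta)
    of its sentence pairs. Each task contributes its mean log-likelihood, so a
    task with more pairs does not dominate the sum; training maximizes this value.
    """
    return sum(sum(lps) / len(lps) for lps in task_logprobs.values())

print(TASKS)
# [('X', 'Y'), ('X', 'Z'), ('Y', 'X'), ('Y', 'Z'), ('Z', 'X'), ('Z', 'Y')]
print(multitask_objective({t: [-1.0, -3.0] for t in TASKS}))  # -12.0
```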

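The structure of our multilingual multi-task UNMT model is shown in Fig. 3. It consists of three encoders, three decoders, a generative adversarial network (discriminator) D [17-18], and a latent space R shared by the three languages after mapping. Each encoder and decoder has four layers, and double-headed arrows denote parameter sharing. Following the experiments of Lample et al. [8], we share the parameters of the last three encoder layers and of the first three decoder layers.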
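The sharing pattern can be expressed by reusing the same layer objects across languages. `Layer` is a hypothetical placeholder for one layer's parameters; embeddings, the discriminator D and the latent space R are not modeled.

```python
class Layer:
    """Hypothetical placeholder for one Transformer layer's parameters."""

    def __init__(self, name):
        self.name = name

def build_stacks(langs=("X", "Y", "Z"), n_layers=4, n_shared=3):
    """One encoder and one decoder per language with partial parameter sharing.

    Encoders: private lower layers, shared top n_shared layers.
    Decoders: shared bottom n_shared layers, private upper layers.
    Sharing is modeled by reusing the same Layer object in several stacks.
    """
    shared_enc = [Layer(f"enc_shared{i}") for i in range(n_shared)]
    shared_dec = [Layer(f"dec_shared{i}") for i in range(n_shared)]
    n_private = n_layers - n_shared
    encoders = {lang: [Layer(f"enc_{lang}{i}") for i in range(n_private)] + shared_enc
                for lang in langs}
    decoders = {lang: shared_dec + [Layer(f"dec_{lang}{i}") for i in range(n_private)]
                for lang in langs}
    return encoders, decoders

def count_distinct(stacks):
    """Number of distinct layer objects, i.e. separately parameterized layers."""
    return len({id(layer) for stack in stacks for layer in stack})

encoders, decoders = build_stacks()
print(count_distinct(list(encoders.values()) + list(decoders.values())))  # 12 (24 without sharing)
```

The private lower encoder layers and upper decoder layers keep language-specific processing, while the shared middle of the network is where the three languages are pushed toward a common representation.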

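To strengthen the role of the shared latent space R, we train a generative adversarial network D that performs three-way classification over the outputs of the three encoders for X, Y and Z, predicting which language the current encoding belongs to. D is optimized by minimizing the cross-entropy loss in Eq. (5):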

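$$L_D(\theta_D) = -\mathbb{E}_{s'\in X}\left[\log P(f=X \mid E_X(s'))\right] - \mathbb{E}_{s'\in Y}\left[\log P(f=Y \mid E_Y(s'))\right] - \mathbb{E}_{s'\in Z}\left[\log P(f=Z \mid E_Z(s'))\right] \tag{5}$$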

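where $E_X(s')$ denotes the representation of sentence $s'$ produced by the X-language encoder, and $s'$ may come from either the source or the target side; $\theta_D$ denotes the parameters of D, and $f\in\{X,Y,Z\}$. To train adversarially against D, each encoder is optimized to make D predict one of the other two languages: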

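$$L_{E_X}(\theta_{E_X}) = -\mathbb{E}_{s'\in X}\left[\log P(f=Y \mid E_X(s'))\right] - \mathbb{E}_{s'\in X}\left[\log P(f=Z \mid E_X(s'))\right] \tag{6}$$

$$L_{E_Y}(\theta_{E_Y}) = -\mathbb{E}_{s'\in Y}\left[\log P(f=X \mid E_Y(s'))\right] - \mathbb{E}_{s'\in Y}\left[\log P(f=Z \mid E_Y(s'))\right] \tag{7}$$

$$L_{E_Z}(\theta_{E_Z}) = -\mathbb{E}_{s'\in Z}\left[\log P(f=X \mid E_Z(s'))\right] - \mathbb{E}_{s'\in Z}\left[\log P(f=Y \mid E_Z(s'))\right] \tag{8}$$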

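where $E_Y(s')$ and $E_Z(s')$ are defined analogously to $E_X(s')$, and $\theta_{E_X}$, $\theta_{E_Y}$ and $\theta_{E_Z}$ denote the parameters of the three encoders.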
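A sketch of how Eqs. (5)-(8) score the same discriminator output from the two opposing sides. The probability tables are made-up examples; in a real system they would come from D's softmax over encoder outputs, with expectations estimated by batch means as here. In adversarial training, D and the encoders are typically updated in alternation.

```python
import math

LANGS = ("X", "Y", "Z")

def discriminator_loss(batches):
    """Eq. (5), with expectations replaced by batch means.

    batches maps each language L to D's predicted distributions
    {language: probability} on encodings E_L(s') of sentences s' from L.
    """
    return sum(
        -sum(math.log(p[lang]) for p in dists) / len(dists)
        for lang, dists in batches.items()
    )

def encoder_loss(lang, dists):
    """Eqs. (6)-(8): encoder E_lang is pushed to make D predict the other languages."""
    others = [o for o in LANGS if o != lang]
    return sum(-sum(math.log(p[o]) for p in dists) / len(dists) for o in others)

uniform = {"X": 1 / 3, "Y": 1 / 3, "Z": 1 / 3}   # D cannot tell the languages apart
confident = {"X": 0.98, "Y": 0.01, "Z": 0.01}    # D is sure the encoding is X
print(round(encoder_loss("X", [uniform]), 3))    # 2.197, i.e. 2 ln 3
print(round(encoder_loss("X", [confident]), 3))  # 9.21
```

When D identifies the language confidently, its own loss is small but the encoder's loss is large, so the encoder is driven toward representations from which the language cannot be read off, i.e., toward the shared space R.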

2 Experiments

We conducted UNMT experiments among English, German and French, and evaluated translation performance with the bilingual evaluation understudy (BLEU) score [19].
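For readers unfamiliar with the metric, here is a minimal corpus-level BLEU sketch: clipped n-gram precisions up to 4-grams, combined by a geometric mean and multiplied by a brevity penalty. It assumes one reference per sentence and no smoothing; the paper does not say which BLEU script it used, and standard tools (e.g. multi-bleu.perl or sacreBLEU) add tokenization and other details omitted here. A "BLEU point" difference, as reported in Sec. 2.2, is a difference on this 0-100 scale.

```python
import math
from collections import Counter

def ngram_counts(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def corpus_bleu(hypotheses, references, max_n=4):
    """Corpus-level BLEU on a 0-100 scale: one reference per sentence, uniform
    n-gram weights, brevity penalty, no smoothing."""
    matches, totals = [0] * max_n, [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        hyp_len += len(hyp)
        ref_len += len(ref)
        for n in range(1, max_n + 1):
            ref_counts = ngram_counts(ref, n)
            # Clipping: a hypothesis n-gram is credited at most as often as it occurs in the reference.
            matches[n - 1] += sum(min(c, ref_counts[g]) for g, c in ngram_counts(hyp, n).items())
            totals[n - 1] += max(len(hyp) - n + 1, 0)
    if min(matches) == 0:
        return 0.0  # some n-gram order has no match at all
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: penalize output that is shorter than the references overall.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * bp * math.exp(log_precision)

ref = "the cat is on the mat".split()
print(corpus_bleu([ref], [ref]))  # 100.0
```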

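2.1 Experimental Setup

Following Lample et al. [8], we extracted 10 million monolingual sentences each in English, German and French from the WMT 2007 to WMT 2010 corpora as the training set. The de-en, en-fr and de-fr sets of newstest2012 were used as development sets, and those of newstest2013 as test sets.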

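We used Adam [20] as the optimizer, with a dropout rate of 0.1, a word embedding dimension of 512 and a maximum sentence length of 175; any sentence longer than 175 words was truncated. The number of training steps was 3.5×10⁵, and all other hyperparameters used the Transformer defaults. In the three-language multi-task model, the three languages share one vocabulary built with 85,000 BPE merge operations, and cross-lingual word embeddings were learned on the BPE-segmented training set with fastText (https://github.com/facebookresearch/fastText).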

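For comparison, the conventional bilingual single-task UNMT model follows the settings of Lample et al. [8]: the two languages share one vocabulary built with 60,000 BPE merge operations, and all other settings are the same as in the multi-task UNMT model.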
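The "number of BPE operations" (85,000 vs 60,000 above) is the number of merge operations learned; each merge adds one subword symbol, so more merges mean a larger vocabulary and longer subword units. Below is a minimal from-scratch learner in the style of Sennrich et al. [15] on toy word counts; for a shared vocabulary, the counts of all languages would be pooled into one dictionary before learning. The tool the authors actually used is not stated.

```python
import re
from collections import Counter

def learn_bpe(word_freqs, num_merges):
    """Learn BPE merge operations in the style of Sennrich et al. [15].

    word_freqs: word -> frequency. For a shared vocabulary, pool the counts of
    all languages into this one dict before learning.
    Returns the merges in the order they were learned.
    """
    # Each word starts as a sequence of characters plus an end-of-word marker.
    vocab = {" ".join(word) + " </w>": freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for word, freq in vocab.items():
            symbols = word.split()
            for a, b in zip(symbols, symbols[1:]):
                pairs[a, b] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair (first one on ties)
        merges.append(best)
        merged = "".join(best)
        # Match the pair only as whole symbols, not inside longer symbols.
        pattern = re.compile(r"(?<!\S)" + re.escape(" ".join(best)) + r"(?!\S)")
        vocab = {pattern.sub(lambda _: merged, w): f for w, f in vocab.items()}
    return merges

freqs = {"low": 5, "lower": 2, "newest": 6, "widest": 3}  # toy counts
print(learn_bpe(freqs, 3))  # [('e', 's'), ('es', 't'), ('est', '</w>')]
```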

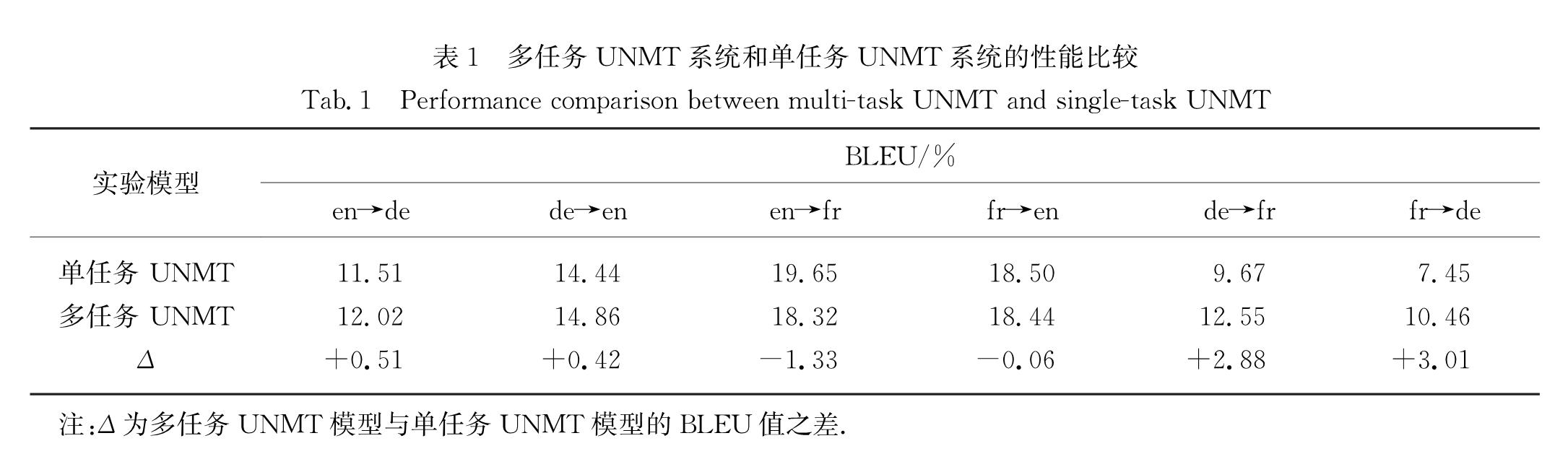

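2.2 Experimental Results

Table 1 reports the translation performance of the single-task and multi-task UNMT models on the test sets. As Table 1 shows, our multi-task UNMT model improves performance on four translation tasks, although the size of the gains varies considerably. On en→de and de→en the BLEU gains are small, whereas on de→fr and fr→de they are clear, about 2.88 and 3.01 BLEU points respectively. On en→fr and fr→en, by contrast, the multi-task model performs worse than the single-task model.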

Table 1 Performance comparison between multi-task UNMT and single-task UNMT

In a significance test at p = 0.01, the proposed model performs better on the four translation tasks en→de, de→en, de→fr and fr→de.

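2.3 Analysis

Because our multi-task UNMT model uses a vocabulary shared by several languages, choosing an appropriate vocabulary size is particularly important. We therefore ran several comparison experiments; the results are shown in Table 2.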

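As Table 2 shows, 85,000 and 90,000 BPE merge operations give relatively good results, but the BLEU scores of the two settings differ little, and for some language pairs 90,000 operations even yield a lower BLEU score than 85,000. We therefore expect that increasing the vocabulary size further would bring little improvement, and chose 85,000 BPE merge operations for our final model.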

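To compare training cost, we counted the trainable parameters in our experiments. Each bilingual single-task system has on the order of 1.3×10⁸ parameters, whereas our multilingual multi-task system has about 1.7×10⁸ in total: only about 1.3 times as many as a bilingual system, and far fewer than the combined parameters of training the six tasks separately. Compared with single-task UNMT, the multi-task UNMT model roughly halves the total training time. To compare translation performance and convergence speed more directly, Fig. 4 plots, as line charts, the bilingual single-task model and our multilingual multi-task model on de→fr and fr→de, the tasks whose translation quality changed most.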
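The parameter comparison above can be checked with simple arithmetic. The second line rests on an assumption not stated in the paper: that covering all six directions with bilingual single-task systems takes three models of about 1.3×10⁸ parameters each, one per language pair (if each of the six directions had its own model, the total would be 7.8×10⁸).

$$\frac{1.7\times10^{8}}{1.3\times10^{8}} \approx 1.31$$

$$3\times\left(1.3\times10^{8}\right) = 3.9\times10^{8} \approx 2.3\times\left(1.7\times10^{8}\right)$$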

multi denotes the multi-task UNMT model; single denotes the single-task UNMT model.

From the experimental results we draw the following conclusions:

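1) Multi-task UNMT does help improve translation performance to some extent, but its effect differs across translation tasks between different languages.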

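2) For languages that already translate relatively well, the multilingual multi-task UNMT model may have little effect and can even be counterproductive, whereas for languages that originally translate poorly the gains are more pronounced. This may be because balanced sampling of the training data favors low-resource language pairs.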

3) Multilingual UNMT can set up translation tasks among several languages simultaneously while substantially reducing the total training time.

3 Conclusion

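This paper analyzed the application of multi-task learning to UNMT and proposed a training model that is effective on some language pairs. Through joint training of multiple tasks, while the source language is being translated into the target language, the model can learn useful semantic and structural information from the monolingual corpus of a third language that is parallel to neither of the two. Besides improving translation performance, the proposed model also shortens, to some extent, the training time for translation among multiple language pairs.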

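From the experimental results we conjecture that sharing one vocabulary among three languages reduces, to some extent, the accuracy of predicting target-side words. In future work we will consider adding a second generative adversarial network to the model to judge which language the current target-language output was translated from, which may improve translation performance. In addition, since multilingual UNMT helps translation for languages with scarce corpora, one could also introduce a third language that has parallel sentence pairs [21], using high-resource language pairs to help low-resource pairs learn and thereby improve their translation.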

- [1] LI Y C,XIONG D Y,ZHANG M.A survey of neural machine translation[J].Chinese Journal of Computers,2018,41(12):100-121.(in Chinese)

- [2] BAHDANAU D,CHO K,BENGIO Y,et al.Neural machine translation by jointly learning to align and translate[EB/OL].[2019-08-01].https://arxiv.org/pdf/1409.0473.pdf.

- [3] WU Y,SCHUSTER M,CHEN Z,et al.Google's neural machine translation system:bridging the gap between human and machine translation[EB/OL].[2019-08-01].https://arxiv.org/pdf/1609.08144.pdf.

- [4] SUTSKEVER I,VINYALS O,LE Q V,et al.Sequence to sequence learning with neural networks[C]∥Neural Information Processing Systems.Montréal:NIPS,2014:3104-3112.

- [5] VASWANI A,SHAZEER N,PARMAR N,et al.Attention is all you need[C]∥Neural Information Processing Systems.Long Beach:NIPS,2017:5998-6008.

- [6] ARTETXE M,LABAKA G,AGIRRE E,et al.Learning bilingual word embeddings with(almost)no bilingual data[C]∥Annual Meeting of the Association for Computational Linguistics.Vancouver:ACL,2017:451-462.

- [7] ARTETXE M,LABAKA G,AGIRRE E,et al.Unsupervised neural machine translation[EB/OL].[2019-08-01].https://arxiv.org/pdf/1710.11041.pdf.

- [8] LAMPLE G,OTT M,CONNEAU A,et al.Phrase-based & neural unsupervised machine translation[C]∥Empirical Methods in Natural Language Processing.Brussels:EMNLP,2018:5039-5049.

- [9] LAMPLE G,CONNEAU A,DENOYER L,et al.Unsupervised machine translation using monolingual corpora only[EB/OL].[2019-08-01].https://arxiv.org/pdf/1711.00043.pdf.

- [10] DONG D,WU H,HE W,et al.Multi-task learning for multiple language translation[C]∥International Joint Conference on Natural Language Processing.Beijing:IJCNLP,2015:1723-1732.

- [11] HE K,ZHANG X,REN S,et al.Deep residual learning for image recognition[EB/OL].[2019-08-01].https://arxiv.org/pdf/1512.03385.pdf.

- [12] MIKOLOV T,CHEN K,CORRADO G S,et al.Efficient estimation of word representations in vector space[EB/OL].[2019-08-01].https://arxiv.org/pdf/1301.3781.pdf.

- [13] MIKOLOV T,SUTSKEVER I,CHEN K,et al.Distributed representations of words and phrases and their compositionality[EB/OL].[2019-08-01].https://arxiv.org/pdf/1310.4546.pdf.

- [14] YANG Z,CHEN W,WANG F,et al.Unsupervised neural machine translation with weight sharing[C]∥Annual Meeting of the Association for Computational Linguistics.Melbourne:ACL,2018:46-55.

- [15] SENNRICH R,HADDOW B,BIRCH A,et al.Neural machine translation of rare words with subword units[EB/OL].[2019-08-01].https://arxiv.org/pdf/1508.07909.pdf.

- [16] GULCEHRE C,AHN S,NALLAPATI R,et al.Pointing the unknown words[C]∥Annual Meeting of the Association for Computational Linguistics.Berlin:ACL,2016:140-149.

- [17] BARONE A.Towards cross-lingual distributed representations without parallel text trained with adversarial autoencoders[C]∥Annual Meeting of the Association for Computational Linguistics.Berlin:ACL,2016:121-126.

- [18] YANG Z,CHEN W,WANG F,et al.Improving neural machine translation with conditional sequence generative adversarial nets[C]∥North American Chapter of the Association for Computational Linguistics.New Orleans:NAACL,2018:1346-1355.

- [19] PAPINENI K,ROUKOS S,WARD T,et al.BLEU:a method for automatic evaluation of machine translation[C]∥Annual Meeting of the Association for Computational Linguistics.Philadelphia:ACL,2002:311-318.

- [20] KINGMA D P,BA J.Adam:a method for stochastic optimization[EB/OL].[2019-08-01].http://arxiv.org/pdf/1412.6980.pdf.

- [21] REN S,CHEN W,LIU S,et al.Triangular architecture for rare language translation[C]∥Annual Meeting of the Association for Computational Linguistics.Melbourne:ACL,2018:56-65.